You understand how the Newton-Raphson method works, but don’t know which \(x_0\) to use? I know exactly what you mean. So how do you find the best initial guess for Newton’s method?

There is no general answer that fits all concrete cases or problems. As a strategy, the following observations and steps help to identify a suitable initial guess \(x_0\) for finding a certain root:

- there is no best initial guess (that would be the root itself)

- instead, a suitable initial guess is needed

- if quickly possible, plot the function

- to compute a numerical approximation to a particular root, choose an initial guess close enough to that root

- close enough means a position \(x_0\), from which the algorithm will not jump to another root – however, this is not possible for particular kinds of roots

- if evaluation of the function is expensive and/or computing its derivative is not directly possible, start at the most reasonable value for \(x_0\) that you can think of

- in such a case, use evaluated function values for finding the root and simultaneously plotting the function to get a better understanding of its behavior.

- use auxiliary or alternative methods, if necessary

This summary gives you the most important ideas, but there is a lot to understand here, so let’s go over all of this again, step by step and in due detail.

The role of the initial guess \(x_0\) in Newton’s method

Newton’s method, also called the Newton-Raphson method, is used to numerically approximate a root of a function \(f(x)\) of a variable \(x\) by a sequence of steps \(x_i\) (the first of which is \(x_0\)). Ideally, \(f(x_i)\) approaches zero such that the desired equation

\[f(x)=0\]

is approximated with the desired accuracy.

The method is iterative and uses both the function \(f(x)\) as well as its first derivative \(f'(x)\) in order to find a root, one step at a time. In each iteration step, we start at some \(x_i\) and get to the next approximation \(x_{i+1}\) via the construction

\[x_{i+1}=x_i – \frac{f(x_i)}{f'(x_i)}\]

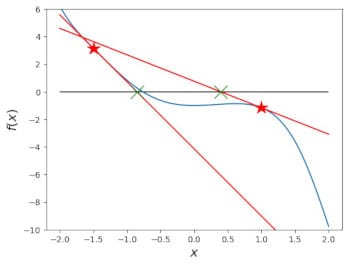

The choice of \(x_0\) determines, where the iteration starts. The particular choice for this initial guess is important, because it may work nicely, but just as well lead to a failure of the method, depending on the particular example.

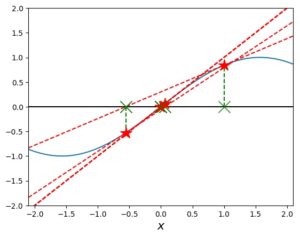

In the picture you can see a preview of this, but if you’d like to know more about the Newton-Raphson method before we dive into the initial-guess discussion, I recommend that you take a look at my articles Newton’s Method Explained: Details, Pictures, Python Code and Highly Instructive Examples for the Newton Raphson Method, here on ComputingSkillSet.com.

Finding an initial guess to demonstrate how Newton’s method works vs. to solve an actual problem

Before we further discuss how to actually make an initial guess for Newton’s method, it is important to understand the following: explanations of this problem sometimes suffer from not making the following difference clear enough:

- finding an initial guess in a demonstration of Newton’s method or

- picking a suitable initial guess for Newton’s method when solving an actual or real-world problem

The essential difference is that in most cases where we need to solve an actual problem, we do not know a lot about the function. It may take a substantial amount of computing time to compute the function at a single point, so that there is no quick way to plot it. We may have no idea as to where and how many the roots are. We may not even know for sure that the function actually has roots.

Let’s keep this in mind when I describe the different strategies below. I would like to keep the discussion as general as possible. As a result, I’ll state various assumptions (like that we can quickly plot the function) and then investigate what can be done. However, the basic idea should always be that we don’t know anything (else).

How failure of Newton’s method can depend on the initial guess

In my article Newton’s Method Explained: Details, Pictures, Python Code, I describe the scenarios for failure of the Newton-Raphson method in detail, and in my article Highly Instructive Examples for the Newton Raphson Method, I show examples for each of theses cases. In short, they are:

- at some point during the iteration, a point \(x_i\) with \(f'(x_i)\) is hit by the algorithm. There the denominator in the expression above vanishes and the algorithm cannot continue.

- the sequence \(x_i\) runs away towards an asymptotic region of the function

- the sequence \(x_i\) oscillates between two points or regions

- there is no root of \(f(x)\) at all

- at one (or more) of the roots, \(f(x)\) rises more slowly than the square root of \(x\)

In the last two of these cases, the initial guess is irrelevant. Newton’s method diverges for all initial guesses. If you’d like to see examples for these cases, you can jump to the corresponding examples in my article Highly Instructive Examples for the Newton Raphson Method.

For the first case on the list above, it is somewhat obvious but important to note that we should avoid zeros in the function’s first derivative as choices for \(x_0\). If such a zero in \(f'(x)\) appears for a later \(x_i\), a slight change in \(x_0\) should be enough to avoid it.

For the oscillatory case, we must change \(x_0\) such that entering the regions, between which the method oscillates, is avoided. Most importantly, \(x_0\) must lie outside any such region. There is an example for this in Highly Instructive Examples for the Newton Raphson Method.

For the asymptotic case, we need to make sure that \(x_0\) is outside the branch of the function, which has the asymptotic behavior. This example is harder to imagine, but there are plots and details in my article Highly Instructive Examples for the Newton Raphson Method.

In my experience, it is unlikely to hit a single exact point with a numerical algorithm. So, in general you’ll likely not get a zero derivative or an oscillation between single points in the middle of the sequence of the \(x_i\). The other cases can happen, but will clearly show up in the sequence for you, if you know what convergence looks like for the \(x_i\) in the Newton-Raphson method. As soon as you see oscillation and relative differences that don’t really get smaller for 50 points or more, you should get suspicious.

So, if Newton’s method diverges for a particular \(x_0\), check for asymptotic or oscillatory regions, for roots weaker than the square root (let’s call them “not-well-behaved” for the present purposes), and whether or not your function actually has roots. Choose another \(x_0\) and run the iteration again.

One particular aspect of the stability of Newton’s method is the following:

Stability of Newton’s method regarding the choice of the initial guess

In the previous section I told you about all the things that can go wrong when working with Newton’s method in terms of a sequence of the \(x_i\) that won’t converge as expected. Most of these depend on \(x_0\) and can be cured, if an appropriate \(x_0\) is chosen. However, there is an additional and different kind of instability triggered by the choice of \(x_0\):

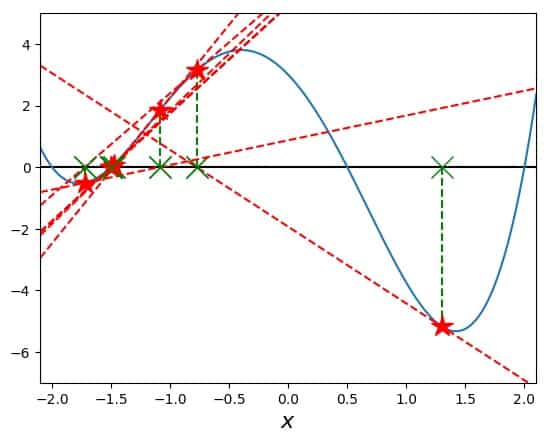

In this other case, the sequence does converge, but not necessarily towards the root we had in mind. What does that mean? In order for this to play a role, the function under consideration has to have at least 2 different and well-behaved roots. Then, it depends on the starting point \(x_0\), to which of these two roots the Newton-Raphson algorithm converges.

In general, starting close to each root results in convergence to that root, while the outcome is not clear when starting at some random \(x_0\). For sufficiently complex functions, this behavior can be fractal. This is, in fact, a way to generate fractals, which are described in detail in my article Newton Fractals Explained: Examples and Python Code.

In summary, this means that

- for more complex functions with several roots, it is necessary to be close enough to the root, for which an approximation is needed via the Newton-Raphson method

- the Newton-Raphson method can have a cool but maybe unwanted fractal behavior on the initial guess

- in addition, there can be regions of divergence as a function of the initial guess

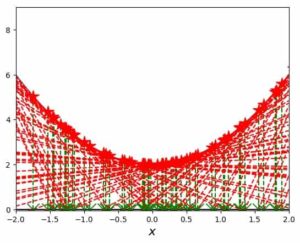

As a result, the idea to use a grid of initial guesses (as you would in order to get the picture of a Newton fractal), is very useful in terms of finding different roots of the function under investigation:

Using a grid of initial guesses for Newton’s method

So the first concrete strategy to define an initial guess for Newton’s method is to actually use more than one at the same time: use a grid of initial guesses. In order for us to be able to do this, it must be reasonably cheap to evaluate the function and its derivative. Basically, this is the case for any function that can be written down in a closed form (with formulas involving known functions).

The resulting approximations for roots of the function should be recurring. More precisely, for those cases (initial-guess grid points) where Newton’s method converges, we can usually identify several results to be the same within a certain precision. So, every number (result) that looks the same to, e.g., six significant digits is considered the same.

In this way, we usually get a handful of roots together with suitable values of initial guesses \(x_0\) that produced each of them. Thus, this strategy effectively solves the question how we can find an initial guess for each root, unless the one we are looking for is missing from the set of results. However, if the interval for the grid of \(x_0\) values includes the desired root, our desired root is well-behaved, and the grid points are numerous enough, there should not be a problem.

One quick note, however, gets us back to the argument of demonstration vs. real life: this doesn’t work, if the function and/or its derivative are expensive or impossible to compute numerically. We’ll get to these situations in a minute. Before that, some more ideal situations:

Picking an initial guess for Newton’s method, if you can quickly plot the function

If you can quickly plot the function, for which you’d like to find a root using Newton’s method, then:

- do that and look at the plot

- check for approximate values of the roots by inspecting the function graph’s intersections with the x-axis

- use a starting value \(x_0\) for which you can see the tangent to the curve staying close to the curve

- run Newton’s method starting at an \(x_0\) that appears to be slightly left of the root

- if the run does not converge to the desired root, but jumps away, start another run from an \(x_0\) that appears to be slightly right of the root

- you should have a satisfactory result

If this tactic fails, the root may be not-well-behaved, i.e., the function rises more slowly than the square root around the root. In such a case, Newton’s method diverges away from this root, no matter how close your \(x_0\) may have already been to the root in the first place.

Even worse, the function might do something pathological (= really weird) close to the root that the plot doesn’t show. In such a case, zoom in with the plot an inspect on a smaller scale around the root. Choose points for \(x_0\) that appear even closer than the ones before.

The initial guess for Newton’s method, if there is only one root

This case is rather simple in the following sense: if you find a converged result for Newton’s method, you are done. If you know (or see in the graph) that the function has only one root, you can basically choose whatever you want for \(x_0\) and run it.

As long as you don’t run into an asymptotic or oscillatory behavior, any point on the x-axis should do. If this one root is not-well-behaved, Newton’s method cannot be used to approximate it further (use an alternative method instead).

In fact, this tactic is also a legitimate try for any function that you know nothing about. Just pick any \(x_0\) and run Newton’s method from that initial guess. In a lot of cases, you’ll end up at a root of the function. In general, that root may not be the only one, so keep your eyes open, but it’s a good start.

How to use Newton’s method and find an initial guess, if the function is only given numerically

If we don’t have a closed form for the function that we are investigating, we usually either have numerical data or another numerical way to compute the values of \(f\) for a particular argument.

This happens often in scientific or engineering applications, where an equation’s solution yields the value of the function, and the function’s variable is a parameter in the equation. This is not easy to understand, so let’s look at an example.

Imagine that we’d like to know, which positive value for \(a\) solves the following equation:

\[\int_0^\infty dx\; x \exp (-a x)=4\]

Yes, I know that this integral can be solved analytically, but let’s assume that we can’t. In that case, we could use Newton’s method to solve for \(a\) by defining

\[f(a) = \int_0^\infty dx\; x \exp (-a x)-4\]

The root of this function of the variable \(a\) is the desired solution. In order to use Newton’s method, we’d have to pick a value for \(a\), solve the original equation (i.e., compute the integral over x), and thus calculate the value of \(f(a)\) for a couple of values of \(a\).

One difficulty with this is that in general there probably won’t be a direct way to calculate the derivative of \(f(a)\) with respect to \(a\). In order to circumvent this, we would have to use a secant (or a combination of secants ) instead of the tangent at a given point \(a_i\) in the Newton sequence.

) instead of the tangent at a given point \(a_i\) in the Newton sequence.

Another possible difficulty is that, to begin with, we basically have no idea what the function \(f\) looks like. If in addition it is computationally expensive to compute a function value, it may take a while to get an idea of the function’s shape, its properties, and the possible locations of its roots. Still, we can go ahead, start somewhere, and compute a sequence along the line of Newton’s method.

In addition to using the sequence to try to approximate one of the roots, we could then use the computed function values to start plotting the function. Another possible strategy is to investigate the asymptotics of the function, e.g. with these questions:

- What is the function’s behavior, when the argument tends to positive and negative infinity?

- Can we find any singularities in the function?

- Is there a way to make general statements about the function’s values like bounds, limits, and the like?

In case of doubt, and without any knowledge about the function under investigation, it is best to simply start with a reasonable value for the initial guess, and run the Newton-Raphson algorithm. Improve your guess as you move along and gain more knowledge, if necessary.

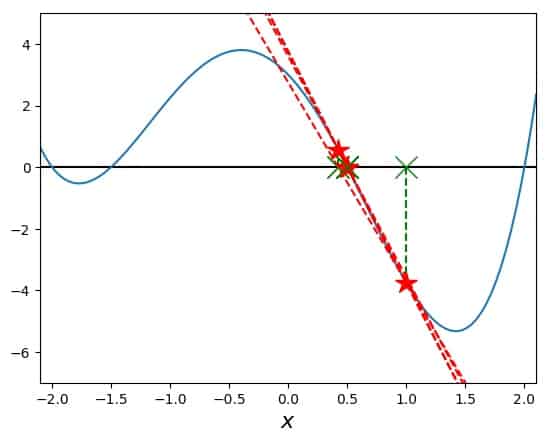

Finding an initial guess for Newton’s method for a periodic function

There is no principal problem with using Newton’s method to find roots of a periodic function. However, in practice we have to remember that the roots of a periodic function are periodic as well. What this means is basically that very likely there is an infinite number of them.

Now, let’s remember our discussion of the Newton-Raphson algorithm jumping from one root to another. For a periodic function, this is pretty common, and the regions of small function slope are periodic as well. So, caution is advised and initial guesses should be picked as close to a root as possible.

The method using a grid works well in this case. The sine function, for example, yields a very nice example for a Newton fractal. Still, we see in those fractal figures that the regions where Newton’s method converges to one and the same root are comfortably large. So we can expect the method to work as soon as our choice for the initial guess is reasonable and the root is well-behaved.

If you know that your function is periodic, but not what it looks like, plot as much of it as the computational cost allows. Use the points you have to decide which root to aim at, and place your initial guess as close to the root as you can.

Using intervals and averages to determine a suitable initial guess for Newton’s method

In a couple of places, I found the suggestion to build intervals around the roots and then use averages of the interval boundaries as initial guesses inside each interval. While this is a helpful strategy, it has to be used with caution.

In particular, this brings me back to the issue with demonstrations of Newton’s method. These are mostly done using polynomials. And while there is nothing wrong with polynomials, because they appear often and are important, they are also very, very, very well-behaved.

So, if you struggle with finding a suitable initial guess for a real-world problem, and you suspect that it is not simply a polynomial, here are a few guidelines for using intervals and averages:

The basic idea is to construct intervals \([a_i,b_i]\) around each root \(r_i\) such that a suitable initial guess is given by the arithmetic mean of the interval boundaries as

\[x_0=\frac{a_i+b_i}{2}\]

As I already mentioned, this will work well most of the time. The usual situation for this technique is: the function values \(f(a_i)\) and \(f(b_i)\) have opposite sign, which indicates a root in the interval. Unless, of course, there is a singularity (i.e., the function is not continuous in the entire interval).

Furthermore, there can be more than one root in the interval (and a singularity, but let’s ignore that for the moment). In fact, for opposite signs of \(f(a_i)\) and \(f(b_i)\), there could be any odd number of roots inside the interval, if we didn’t look closely enough in the first place.

Another problem is that the root is very close to one of the interval boundaries, and that Newton’s method jumps from the arithmetic mean directly outside the interval. It really depends on everything that the function does inside such an interval.

So, this method may indeed help you find a nice initial guess, if you can quickly plot the function and see exactly where to set the interval boundaries. But, to be honest, in such a case, you might as well choose an initial guess close to one of the roots (because you can see where they are!) and proceed without wasting time on constructing intervals in the first place.

Don’t worry about the time it takes to go the extra couple of iteration steps, because in a situation like this, everything is fast: computing the function (because you can quickly plot it), as well as computing its derivative (if you don’t have a closed expression for the derivative, just use a standard numerical derivative , which only requires a handful function values, too).

, which only requires a handful function values, too).

Frankly, the few extra steps in the algorithm will take less time than it would have taken you to put your pencil on the paper and start drawing interval boundaries.

How to find the initial guess in Newton’s method – my conclusion

In summary and in short, you should do the following to find a suitable initial guess for your Newton-Raphson application:

- Use your best intuition for the initial guess and run Newton’s method right away to gain intuition about your problem.

- Plot as much of the function as you can. If feasible, also plot its derivative.

- Use a sensible grid of initial guesses and run Newton’s method starting from each of them. This will likely give you an approximation for a good number of roots of your function.

- Watch the sequence of \(x_i\) for signs of divergence (including oscillation).

- Always try to pick the initial guess as close to a root as possible.

- Set the maximum number of iteration steps to a reasonable (low, like 30) number.

With these in mind, approach your individual problem. If you are studying the Newton-Raphson method, challenge yourself to experience all the sorts of things it can do for yourself. My article Highly Instructive Examples for the Newton Raphson Method can help you start this process.

Further reading on the Newton-Raphson method

If you liked this explanation and strategies around the initial guess for Newton’s method, I encourage you to also look at my overview article for the method itself, Newton’s Method Explained: Details, Pictures, Python Code.

I personally really like the method’s extension to complex numbers and the resulting Newton fractals. Read on about these cool objects in my article Newton Fractals Explained: Examples and Python Code.

Fun explanations of the Newton-Raphson method

While the Newton-Raphson method can seem like very serious business at times, it is equally important to have some fun explaining it. If you are in for a try, read one of these: